Kelly Criterion Formula

Kelly Criterion In probability theory, the Kelly Criterion is a formula used to determine the optimal size of a series of bets. In most gambling scenarios, and some investing scenarios under some simplifying assumptions, the Kelly strategy will do better than any essentially different strategy in the long run. Mar 27, 2015 Kelly’s criterion is a good start, but it’s not the full picture. If you visualize the relationship between balance growth and the% of risk, it will look like this: From here we can witness the same pattern as we noticed before – to the left of one Kelly return increases as you increase risk.

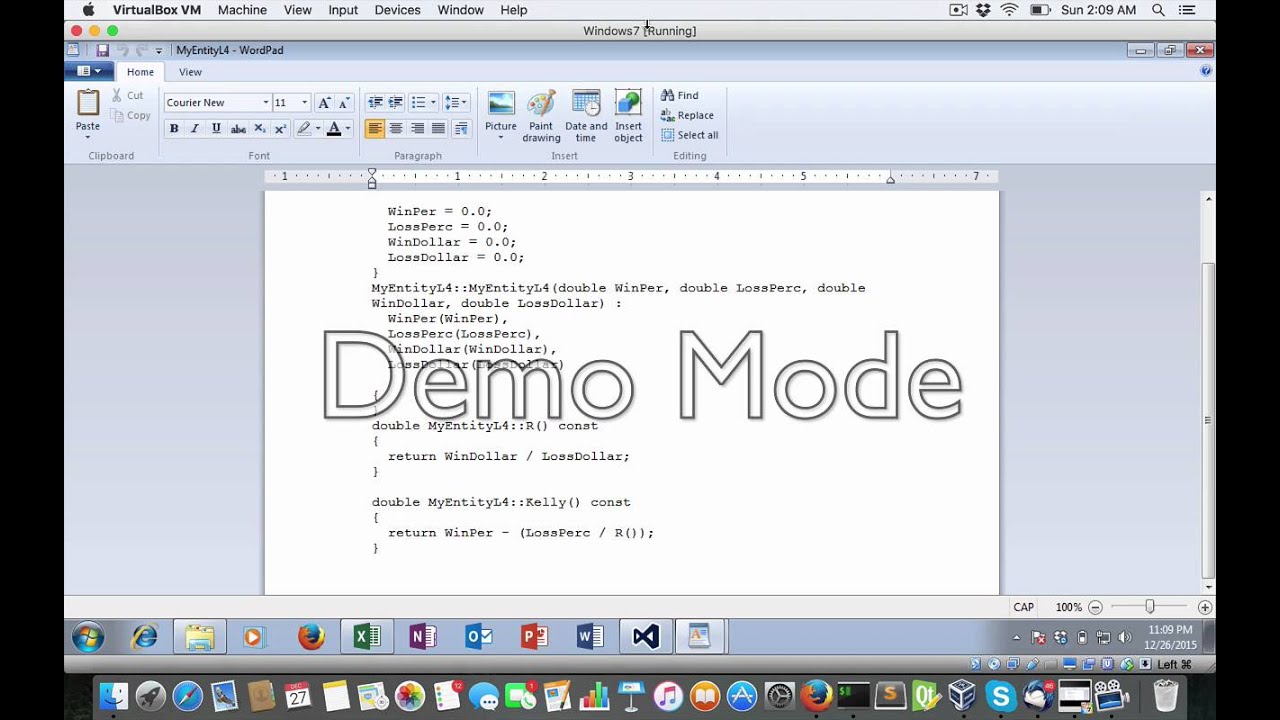

In probability theory and intertemporal portfolio choice, the Kelly criterion (or Kelly strategy or Kelly bet), also known as the scientific gambling method, is a formula for bet sizing that leads almost surely to higher wealth compared to any other strategy in the long run (i.e. in the limit as the number of bets goes to infinity). The Kelly Criterion is a formula used to bet a preset fraction of an account, and it can seem counterintuitive in real time. In its common trading form the Kelly formula is $\mbox{Kelly\%} = W - \frac{1-W}{R}$, where:

- Kelly% = percentage of capital to be put into a single trade.
- W = historical winning percentage of a trading system.
- R = historical average win/loss ratio.

The formula commonly known as the Kelly Criterion is not the real Kelly Criterion. It is a simplified form that works when there is only one bet at a time. What follows explains how to use the "real", or generalised, Kelly Criterion.
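The trading form of the formula can be computed directly from a trade log. Below is a minimal Python sketch. It assumes each list entry is the profit or loss of one trade in the same currency units, and it simply drops break-even trades. `kelly_from_trades` is a hypothetical helper, not part of any library.

```python
def kelly_from_trades(trade_pnls):
    """Kelly% = W - (1 - W) / R, estimated from a list of trade profits/losses.

    W: fraction of trades that were winners.
    R: average winning trade divided by average losing trade (both positive).
    Break-even trades (pnl == 0) are ignored, which is an assumption.
    """
    wins = [pnl for pnl in trade_pnls if pnl > 0]
    losses = [-pnl for pnl in trade_pnls if pnl < 0]
    if not wins or not losses:
        raise ValueError("need at least one winning and one losing trade")
    w = len(wins) / (len(wins) + len(losses))
    r = (sum(wins) / len(wins)) / (sum(losses) / len(losses))
    return w - (1 - w) / r


# Hypothetical history: six trades that made 150, four that lost 100.
history = [150] * 6 + [-100] * 4
print(kelly_from_trades(history))  # W = 0.6, R = 1.5, so about 0.333
```

Read $W$ as the win probability $p$ and $R$ as the payout $b$. This is then the same quantity as the edge over odds rule in the introduction.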

Introduction

The Kelly Criterion is well-known among gamblers as a way to decide how much to bet when the odds are in your favor. Most only know a simplified version. We will show why the simplified version holds, but our main goal is to explain the full version and to give some numerical tools to play with it.

The simple rule goes like this. Suppose that with probability $p$ you will make a profit of $b$ times what you bet, and otherwise you lose the bet. Then the optimal fraction of your bankroll to bet is $\frac{pb - (1-p)}{b}$. (Note that $p$ is a number from $0$ to $1$, so a $50\%$ probability means that $p$ is $0.5$.) Gamblers call $pb - (1-p)$ their edge and $b$ their odds, leading to the simple rule: bet edge over odds.
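A short Python sketch of the simple rule. It assumes a single bet with exactly two outcomes. `kelly_fraction` is an illustrative name, not a library function.

```python
def kelly_fraction(p, b):
    """Edge over odds: the fraction of bankroll to bet on a two-outcome bet.

    p: probability of winning, from 0 to 1.
    b: profit per unit bet when you win (1 for an even-money bet).
    A result of 0 or less means there is no edge, so Kelly says not to bet.
    """
    edge = p * b - (1 - p)
    return edge / b


print(kelly_fraction(0.6, 1))  # 60% to win at even money: about 0.2
print(kelly_fraction(0.5, 3))  # 50% to win, paid 3 to 1: about 0.333
print(kelly_fraction(0.3, 1))  # no edge: negative, so do not bet
```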

This is fine for the simple case, but the simple rule doesn't cover most real world situations. For instance, take the case of a poker tournament where you think you have a $5\%$ chance of winning and multiplying your stake by $20$, and an additional $20\%$ chance of winding up in the money and making a $10\%$ return. How big a buy-in should you be willing to pay? Suppose you're betting on horse racing and you think that 2 of the horses are priced wrong; how much should you bet on each? Why do people recommend betting less than the theoretically optimal amount?

The answers to these questions can be complex. When it is finished this tutorial will explain all of those details, and will give you a calculator to do the math with. (The calculator exists and is useful, but doesn't yet compute the optimal allocations to bet. However for the case of a single bet with multiple outcomes, this calculator will.)

Big-O and little-o

We will be talking about approximations, so we need a language to do it with. In general we start with some complicated function $f(n)$, and try to write it as some approximation $a(n)$ plus some error $e(n)$. In general we hope that the approximation is simple, and the error is small. So we need an easy way to say how small the error is without getting into the details of what that error is.

The standard language for this involves the terms Big-O and little-o. Informally these terms mean 'up to the same general size as' and 'grows more slowly than' respectively. More precisely, if for all 'large enough' $n$, $e(n)$ is bounded by a constant times $h(n)$, then we say that $e(n) = O(h(n))$. If $\frac{e(n)}{h(n)}$ goes to $0$ as $n$ goes to $\infty$, then we say that $e(n) = o(h(n))$.

The links provide even more precise definitions for those who are interested in the formalities. We won't go there. If you understand that $\sqrt{n} = o(n)$ and that an $o(1)$ term vanishes as $n$ goes to $\infty$, then you've got the concept.

Average Rate of Return

Suppose that you're a lucky gambler who has found a bet that you come out ahead on and can play over and over again. You've decided on an investment strategy of betting a fixed fraction of your net worth on it each round. What is your average rate of return in the long term? How do we figure that out?

The trick to math problems like this is to start by setting them up, and get as far as you can. You may not know how to finish, but sometimes you get to the end without problems, and other times you at least make your problem clear.

In this case our investment strategy is going to multiply our net worth in each round by a random variable $X$. If our starting worth is $w_0$, then our worth $w_n$ after $n$ rounds will be $w_0 X_1 X_2 \ldots X_n$, where $X_1, \ldots, X_n$ are the $n$ random outcomes of our bets.

The problem we have is that we're faced with repeated multiplication here. We know how to do statistics with addition, not multiplication. Luckily there is a function, $\log$, that turns multiplication into addition. And we can always use the trick that $y = e^{\log(y)}$. (Note that $e$ is the base of the natural logarithm, $2.71828\ldots$, and $\log$ is the natural logarithm. In grade school math logarithms are base 10, but most advanced math uses base $e$.) Using that trick we get:

$$\begin{eqnarray} w_n & = & w_0 X_1 X_2 \ldots X_n \\ & = & e^{\log(w_0 X_1 X_2 \ldots X_n)} \\ & = & e^{\log(w_0) + \log(X_1) + \log(X_2) + \ldots + \log(X_n)} \end{eqnarray}$$

This is ugly, but how does it help us? Well, we apply the Law of Large Numbers. That says that if $Y$ is a random variable, then the sum of $n$ independent samples $Y_1, \ldots, Y_n$ of $Y$ is $E(Y)n + o(n)$, where $E(Y)$ is the expected value of $Y$.

In this case our random variable is $\log(X)$. So we get:

$$\begin{eqnarray} w_n & = & e^{\log(w_0) + \log(X_1) + \log(X_2) + \ldots + \log(X_n)} \\ & = & e^{\log(w_0) + E(\log(X))n + o(n)} \\ & = & e^{\log(w_0)} e^{E(\log(X))n + o(1)n} \\ & = & w_0 (e^{E(\log(X)) + o(1)})^n \\ & = & w_0 (e^{E(\log(X))}(1 + o(1)))^n \end{eqnarray}$$

In the long run the $o(1)$ term disappears. The gambler's net worth is multiplied by approximately $e^{E(\log(X))}$ each bet, for an average rate of return of $e^{E(\log(X))} - 1$ per bet.
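As a sanity check on this argument, here is a small Python simulation. It assumes the bet is described by a list of (probability, multiplier) pairs for $X$; `growth_factor` and `simulated_growth_factor` are hypothetical helper names. It compares $e^{E(\log(X))}$ with the per-bet growth actually realised over one long simulated run. The bet used is the one from the rate of return example: one third of the bankroll, won 60% of the time.

```python
import math
import random


def growth_factor(outcomes):
    """Long-run per-bet multiplier e^{E(log X)}.

    outcomes: list of (probability, multiplier) pairs describing X, the
    factor by which one round multiplies net worth.
    """
    e_log = sum(prob * math.log(mult) for prob, mult in outcomes)
    return math.exp(e_log)


def simulated_growth_factor(outcomes, n_bets, seed=0):
    """Per-bet multiplier realised in one simulated run of n_bets rounds."""
    rng = random.Random(seed)
    probs = [prob for prob, _ in outcomes]
    mults = [mult for _, mult in outcomes]
    log_wealth = 0.0  # track log(wealth) so a long run cannot overflow or underflow
    for x in rng.choices(mults, weights=probs, k=n_bets):
        log_wealth += math.log(x)
    return math.exp(log_wealth / n_bets)


# X = 4/3 with probability 0.6, 2/3 with probability 0.4
bet = [(0.6, 4 / 3), (0.4, 2 / 3)]
print(growth_factor(bet))                     # about 1.0105
print(simulated_growth_factor(bet, 200_000))  # close to the line above
```

Over a long run the two numbers agree closely, which is the Law of Large Numbers at work. Over short runs they can be far apart.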

Rate of Return Example

That's a lot of theory. Let's do an example to try to understand what it says. Suppose that our gambler has probability $0.6$ of doubling the money that is bet each time, and the rest sits somewhere safe. Our intrepid gambler will put $1/3$ of his money into this bet each round. What is the long term average rate of return of this strategy?

Well, with probability $0.4$ our gambler loses and his net worth is multiplied by $\frac{2}{3}$. Otherwise his net worth is multiplied by $1\frac{1}{3}$. Therefore his long term average rate of return per bet is

$$\begin{eqnarray} e^{E(\log(X))} - 1 & = & e^{0.4 \log(\frac{2}{3}) + 0.6 \log(1\frac{1}{3})} - 1 \\ & = & e^{0.4 \cdot (-0.405465\ldots) + 0.6 \cdot 0.287682\ldots} - 1 \\ & = & e^{0.0104232\ldots} - 1 \\ & = & 1.01047771\ldots - 1 \\ & = & 1.047771\ldots\% \end{eqnarray}$$

On the face of it this is pretty good. Every $50$ bets the gambler will average almost a $70\%$ return on investment. But what happens after $50$ bets if the gambler loses one more bet than expected? Then, on one of the bets where he expected to multiply his net worth by $1\frac{1}{3}$, he multiplied it by $\frac{2}{3}$ instead. He ends up with half as much, and is losing money. In fact it turns out that he has roughly a $44\%$ chance of being behind after $50$ bets. If he's off by $2$ bets he's lost most of his net worth. And, of course, if he's slightly wrong about his odds then he'll lose money. This is why experienced gamblers pay attention to their variance, which leads us into the next section.
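The arithmetic above can be reproduced in a few lines of Python. This sketch also counts the binomial outcomes exactly, to get the chance of being behind after 50 bets. The gambler is behind exactly when his log wealth is negative. `prob_behind` is a hypothetical helper name, and `math.comb` needs Python 3.8 or later.

```python
import math

p_win, frac = 0.6, 1 / 3
win_mult, lose_mult = 1 + frac, 1 - frac  # 4/3 and 2/3

e_log = p_win * math.log(win_mult) + (1 - p_win) * math.log(lose_mult)
rate = math.exp(e_log) - 1
print(f"E(log X) = {e_log:.7f}, rate per bet = {rate:.4%}")
print(f"growth over 50 bets = {math.exp(50 * e_log):.3f}x")


def prob_behind(n_bets, p, win_mult, lose_mult):
    """Exact probability that net worth ends below its start after n_bets."""
    total = 0.0
    for wins in range(n_bets + 1):
        log_final = wins * math.log(win_mult) + (n_bets - wins) * math.log(lose_mult)
        if log_final < 0:
            total += math.comb(n_bets, wins) * p**wins * (1 - p) ** (n_bets - wins)
    return total


print(f"P(behind after 50 bets) = {prob_behind(50, p_win, win_mult, lose_mult):.3f}")
```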

Understanding Variance

All wise gamblers and investors know how easy it is to go broke doing something that should work in the long term. Gamblers call the reason variance - there can be large fluctuations on the way to your long term average, and that variation in net worth can leave you without the resources to live your life. Obviously gambling involves taking risks. However you need to make your risks manageable. But before you can properly manage them, you need to understand them.

Variance as gamblers use it unfortunately doesn't have a precise mathematical definition. Worse yet, mathematicians have a number of terms they use, and none of them are exactly what gamblers need. Here is a short list:

- Expected Value: What most people mean by average. One of the key facts is that the expected value of a sum of random variables is the sum of their expected values.

- Deviation: The difference between actual and expected results.

- Variance: The expected value of the square of the deviation. This is usually not directly applicable to most problems, but has some nice mathematical properties such as the variance of the sum of independent random variables being the sum of the variances.

- Standard Deviation: The square root of the variance. This gives an order of magnitude estimate of how big deviations tend to be. With a normal distribution those estimates can be made precise. For instance, about $68\%$ of the time you'll be within one standard deviation of the average, and about $95\%$ of the time you'll be within two.

Let me explain this in more detail. Suppose we have some betting strategy that will multiply our net worth after each round by a random variable $X$. We want to estimate where we'd be if, say, we were unlucky enough to be in the $10$th percentile after $n$ bets. What kind of calculation would we have to do?

Well, we're going to estimate the $\log$ of our net worth, then take an exponential. As above we can measure $E(\log(X))$; let's call that value $\mbox{irr}$. (That's short for instantaneous rate of return, but let's not go into the reasoning behind that name.) We can also measure the variance of $\log(X)$, take its square root and come up with a standard deviation. Let's call that standard deviation $\mbox{vol}$, for volatility, for reasons that will become clear. The variance is then $\mbox{vol}^2$.

With those measured, we use the fact that both expected values and variances (of independent random variables) add. Therefore the sum of $n$ independent samples of $\log(X)$ has expected value $n\,\mbox{irr}$ and variance $n\,\mbox{vol}^2$. The standard deviation is the square root of the variance, which is $\mbox{vol}\sqrt{n}$. We then go to a lookup table and find that the $10$th percentile of a normal distribution is about $1.28$ standard deviations below the mean. That puts it at $n\,\mbox{irr} - 1.28\,\mbox{vol}\sqrt{n}$. We can then take an exponential to estimate our net worth, take an $n$th root of that to figure out our rate of return, etc.
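The normal approximation just described can be sketched in Python as follows. This is an illustration under the section's assumption of independent, identically distributed bets. `log_stats` and `normal_percentile_multiplier` are hypothetical names. The lookup table is replaced by the standard library's `statistics.NormalDist().inv_cdf`.

```python
import math
from statistics import NormalDist


def log_stats(outcomes):
    """Return (irr, vol): the mean and standard deviation of log(X)."""
    irr = sum(p * math.log(m) for p, m in outcomes)
    var = sum(p * (math.log(m) - irr) ** 2 for p, m in outcomes)
    return irr, math.sqrt(var)


def normal_percentile_multiplier(outcomes, n_bets, percentile):
    """Normal-approximation estimate of the wealth multiplier after n_bets
    at the given percentile (e.g. 0.10 for the 10th percentile)."""
    irr, vol = log_stats(outcomes)
    z = NormalDist().inv_cdf(percentile)  # about -1.28 for the 10th percentile
    log_total = n_bets * irr + z * vol * math.sqrt(n_bets)
    return math.exp(log_total)


bet = [(0.6, 4 / 3), (0.4, 2 / 3)]
mult = normal_percentile_multiplier(bet, 50, 0.10)
print(f"10th percentile after 50 bets: {mult:.3f}x, "
      f"i.e. {mult ** (1 / 50) - 1:.3%} per bet")
```

For the one-third bet this puts the 10th percentile after 50 bets at under a tenth of the starting stake. That is the kind of risk the rate of return example hinted at.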

It is worth asking how accurate this estimate is. The Berry-Esseen theorem can be applied to tell us. It tells us that the normal approximation to the distribution of the $\log$ of our net worth is off by $O(\frac{1}{\sqrt{n}})$ in probability. With calculus it can be shown that the error in locating the percentile line is then $O(1)$. What the constant is depends on which percentile you're looking at. You're going to locate the $30$th percentile more accurately than the $10$th, which is in turn more accurate than the $1$st. When you take an exponential, this constant error turns into being off by up to a constant factor.

Now it may seem bad to be off by a constant factor, but that is unavoidable. In the rate of return example we noticed that the possible returns after $50$ bets came in jumps of a factor of $2$. A continuous approximation has to be wrong by at least $41\%$ somewhere (since $\sqrt{2} \approx 1.41$). Besides, we're not looking at a particular percentile because we want an exact answer, but to get an idea of what our risk is. And it does that.

Rate of Return Calculator

Doing the calculations for the rate of return example was painful. When you add in calculating the volatility (i.e. the standard deviation of $\log(X)$) and then calculating confidence intervals, it gets much worse. And as a double check it might be nice to run a few thousand trials as a Monte Carlo simulation. But who has the energy to do that? Surely no self-respecting degenerate gambler would admit to doing something that looks so much like work.

That's what computers were invented for. If only someone would build an online calculator, then we could just punch numbers in, let the computer do the work, then we could look at the results. But who would build that? :-)

Here is the rate example. The numbers entered say that we're betting $\frac{1}{3}$ of our wealth, we win $\frac{3}{5} = 60\%$ of the time, and when we win we double the money bet. Just press calculate and the calculator does the rest for us. It even lets us figure out where given percentiles will fall after a given number of bets. You can do that either using the normal approximation or by running a Monte Carlo simulation.

Here is a list of what it gives and what they mean:

- E(log(X)): This is the average of what a bet does to the log of your net worth.

- Average Rate of Return: If you follow the betting strategy for a long time, your final return should be close to earning this rate per bet. (With compounding returns.)

- Volatility: The standard deviation of what happens to the log of your net worth. This number drives how much your real returns will bounce above or below the long term average in the short run.

- Volatility ratio: The absolute value of Volatility/E(log(X)). This is a measure of risk. If your average rate of return is positive and this is below 5, you're unlikely to be losing money after 50 bets. If this is below 7 then you're unlikely to be losing money after 100 bets. If this is a lot higher than that, you'd better be ready for a financial roller-coaster.

- Percentile X, n bets - rate of return: After n bets, if your result is at percentile X, what effective interest rate did you get per bet (compounding)? This can be estimated through the normal approximation or a Monte Carlo simulation.

- Percentile X, n bets - final result: After n bets, if your result is at percentile X, how much was your money multiplied by? This can be estimated through the normal approximation or a Monte Carlo simulation. The normal approximation may give somewhat unrealistic answers.
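The calculator itself is not reproduced here, but a rough Python sketch of the same statistics might look like this. It is an illustration under stated assumptions, not the calculator's code. `calculator_summary` is a hypothetical name, the percentile uses a simple nearest-rank rule on Monte Carlo trials, and the bet is given as (probability, multiplier) pairs.

```python
import math
import random


def calculator_summary(outcomes, n_bets, percentile, trials=10_000, seed=1):
    """Rough sketch of the statistics listed above for one repeated bet.

    outcomes: list of (probability, multiplier) pairs for X.
    The percentile results are estimated by Monte Carlo simulation.
    """
    irr = sum(p * math.log(m) for p, m in outcomes)
    vol = math.sqrt(sum(p * (math.log(m) - irr) ** 2 for p, m in outcomes))

    rng = random.Random(seed)
    probs = [p for p, _ in outcomes]
    logs = [math.log(m) for _, m in outcomes]
    # Simulated log of the final wealth multiplier for each trial, sorted.
    finals = sorted(
        sum(rng.choices(logs, weights=probs, k=n_bets)) for _ in range(trials)
    )
    # Nearest-rank percentile of the simulated results.
    idx = min(trials - 1, max(0, math.ceil(percentile * trials) - 1))
    final_mult = math.exp(finals[idx])

    return {
        "E(log(X))": irr,
        "average rate of return": math.exp(irr) - 1,
        "volatility": vol,
        "volatility ratio": abs(vol / irr) if irr else math.inf,
        "percentile final result": final_mult,
        "percentile rate of return": final_mult ** (1 / n_bets) - 1,
    }


for name, value in calculator_summary([(0.6, 4 / 3), (0.4, 2 / 3)], 50, 0.10).items():
    print(f"{name}: {value:.4f}")
```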

Now there is actually a second calculator that can only handle 1 bet. It is like the first, but has the nice feature that it can automatically optimize allocations. That means that it figures out the right amount to bet for maximum returns before doing anything else. You can choose whether to maximize your long-term returns, or to optimize where you'd be after a fixed number of bets if you ended up at a particular percentile. As the note on the calculator says, it estimates returns using a normal approximation and then optimizes that. So the answers you get are good, but not perfect.

Deriving the Classic Rule (calculus)

(You should skip ahead if you don't know calculus.)

Suppose you have a simple bet where with probability $p$ you will make a profit of $b$ times what you bet, and otherwise you lose your bet. What is the optimal fraction of our bankroll to bet?

Well, our average rate of return is determined by $E(\log(X))$. If we're betting a fraction $x$ of our total bankroll, then $E(\log(X)) = p\log(1 + bx) + (1-p)\log(1-x)$. To maximize this we need to find where the derivative is $0$. First let us find the derivative:

$$\begin{eqnarray} \frac{d}{dx}(E(\log(X))) & = & \frac{d}{dx}(p\log(1 + bx) + (1-p)\log(1-x)) \\ & = & pb\frac{1}{1+bx} + (1-p)(-1)\frac{1}{1-x} \\ & = & \frac{pb}{1+bx} - \frac{1-p}{1-x} \end{eqnarray}$$

Now let us find where it is $0$:

$$\begin{eqnarray} 0 & = & \frac{d}{dx}(E(\log(X))) \\ 0 & = & \frac{pb}{1+bx} - \frac{1-p}{1-x} \\ 0 & = & pb(1-x) - (1-p)(1+bx) \\ 0 & = & pb - pbx - 1 - bx + p + pbx \\ bx & = & pb + p - 1 \\ x & = & \frac{pb - (1-p)}{b} \end{eqnarray}$$

This is the classic edge over odds equation that gamblers know and love.
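Here is a brute-force numerical check of this derivation as a minimal Python sketch. It evaluates $E(\log(X))$ on a fine grid of betting fractions and picks the best one, which should land next to edge over odds. `expected_log` and `best_fraction_by_grid` are illustrative names.

```python
import math


def expected_log(x, p, b):
    """E(log(X)) when betting fraction x on a win-b-or-lose-the-stake bet."""
    return p * math.log(1 + b * x) + (1 - p) * math.log(1 - x)


def best_fraction_by_grid(p, b, steps=10_000):
    """Brute-force maximiser over x in [0, 1) as a check on the calculus."""
    grid = (i / steps for i in range(steps))  # stops short of x = 1, where log(0) fails
    return max(grid, key=lambda x: expected_log(x, p, b))


p, b = 0.6, 1
print(best_fraction_by_grid(p, b))  # grid search
print((p * b - (1 - p)) / b)        # edge over odds, for comparison
```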

Complex Bets have no Simple Rule (calculus)

That rule is simple and memorable, but what happens when the bet gets more complex? For instance, in the introduction we brought up the case of a poker tournament where you think you have a $5\%$ chance of winning and multiplying your stake by $20$, and you have an additional $20\%$ chance of winding up in the money and making a $10\%$ return. What is the optimal portion of your net worth to bet?

Well most gamblers would say, 'edge over odds'. But what are your odds? You have $1$ chance in $4$ of making something, but the bulk of your returns come in the $1$ chance in $20$ that you take it all. Do you weight things somehow? If so, then how?

Unfortunately there is no useful general rule. The general principle of optimizing the log of your net worth applies, but it won't give a simple formula that you can use. That's because there is no simple formula; at some point you need to use a mathematical approximation.

(You should skip ahead if you don't know calculus.)

Let's see this by trying to calculate Kelly for the simple scenario of the poker tournament.

As before, our average rate of return is determined by $E(\log(X))$. If we're betting a fraction $x$ of our total bankroll, then $E(\log(X)) = 0.75\log(1-x) + 0.2\log(1+0.1x) + 0.05\log(1+19x)$. (The $19$ comes because we paid $x$ then won $20x$, so $19x$ is our profit.) Now let us find the derivative as we did before:

$$\begin{eqnarray} \frac{d}{dx}(E(\log(X))) & = & \frac{d}{dx}(0.75\log(1-x) + 0.2\log(1+0.1x) + 0.05\log(1+19x)) \\ & = & -\frac{0.75}{1-x} + \frac{0.02}{1+0.1x} + \frac{0.95}{1+19x} \end{eqnarray}$$

Now let us find where it is $0$:

$$\begin{eqnarray} 0 & = & \frac{d}{dx}(E(\log(X))) \\ 0 & = & -\frac{0.75}{1-x} + \frac{0.02}{1+0.1x} + \frac{0.95}{1+19x} \\ 0 & = & -0.75(1+0.1x)(1+19x) + 0.02(1-x)(1+19x) + 0.95(1-x)(1+0.1x) \\ 0 & = & -0.75(1 + 19.1x + 1.9x^2) + 0.02(1+18x-19x^2) + 0.95(1-0.9x-0.1x^2) \\ 0 & = & (-0.75 - 14.325x - 1.425x^2) + (0.02 + 0.36x - 0.38x^2) + (0.95 - 0.855x - 0.095x^2) \\ 0 & = & (-0.75 + 0.02 + 0.95) + (-14.325 + 0.36 - 0.855)x + (-1.425 - 0.38 - 0.095)x^2 \\ 0 & = & 0.22 - 14.82x - 1.9x^2 \end{eqnarray}$$

And now we can apply the quadratic formula to get:

$$\begin{eqnarray} x & = & \frac{14.82 \pm \sqrt{14.82^2 - 4 \cdot 0.22 \cdot (-1.9)}}{2 \cdot (-1.9)} \\ & = & \frac{14.82 \pm \sqrt{221.3044}}{-3.8} \end{eqnarray}$$

There are two solutions. One is about $-7.8$, which would have us betting a negative amount. The other is $0.0148166590\ldots$, which is the answer we are looking for.
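The quadratic can also be double-checked numerically in Python. This sketch takes the coefficients derived above and solves with the quadratic formula. It then confirms that the derivative of $E(\log(X))$ really is about $0$ at the positive root.

```python
import math

# Coefficients of 0 = 0.22 - 14.82x - 1.9x^2, written as a*x^2 + b*x + c = 0.
a, b, c = -1.9, -14.82, 0.22
disc = b * b - 4 * a * c  # about 221.3044
sqrt_disc = math.sqrt(disc)
roots = sorted([(-b + sqrt_disc) / (2 * a), (-b - sqrt_disc) / (2 * a)])
print(roots)  # one negative root, one small positive root


def derivative(x):
    """d/dx of 0.75log(1-x) + 0.2log(1+0.1x) + 0.05log(1+19x)."""
    return -0.75 / (1 - x) + 0.02 / (1 + 0.1 * x) + 0.95 / (1 + 19 * x)


kelly = roots[1]
print(kelly, derivative(kelly))  # the derivative should be about 0 here
```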

Now we should double check this. We can set up the 1 bet calculator to compute these results like this. Now press 'Calculate' and you can see that the calculator verifies our answer.

Now let's reflect. With 2 possible outcomes we had a simple linear equation. With 3 possible outcomes we had a second degree equation that turned into a mess. The polynomial came from the step where we multiplied out the denominators. Looking at that step, you can see that 4 possible outcomes would give us a third degree polynomial, 5 possible outcomes would give us a fourth degree polynomial, and so on. Then to get the answer we have to find the roots of the polynomial, which is hard, and is why there can be no simple rule. The calculation will be complicated, and complicated calculations should be given to a computer.
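One way to give the calculation to a computer without forming the polynomial at all is to bisect on the derivative. This is a swapped-in technique, not necessarily what the calculators do. Because $\frac{d}{dx}E(\log(X))$ is strictly decreasing in $x$, it has at most one root, so a simple bisection finds the optimal bet for any number of outcomes. `kelly_single_bet` is a hypothetical name. Outcomes are given as (probability, net profit per unit staked) pairs.

```python
def kelly_single_bet(outcomes, tol=1e-12):
    """Optimal fraction x for one bet with several outcomes.

    outcomes: list of (probability, net_return) pairs, where net_return is the
    profit per unit staked (-1 means the stake is lost, 19 means it comes back
    20-fold). Maximises sum(p * log(1 + r * x)) by bisecting on the derivative,
    which is strictly decreasing in x, so it has at most one root.
    """
    def slope(x):
        return sum(p * r / (1 + r * x) for p, r in outcomes)

    if slope(0.0) <= 0:
        return 0.0  # no edge: do not bet
    worst = min(r for _, r in outcomes)
    if worst >= 0:
        raise ValueError("no losing outcome: the log-optimal bet is unbounded")
    lo, hi = 0.0, -1.0 / worst  # at x = hi the worst outcome wipes you out
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if slope(mid) > 0:
            lo = mid
        else:
            hi = mid
    return lo


poker = [(0.75, -1.0), (0.20, 0.1), (0.05, 19.0)]
print(kelly_single_bet(poker))                      # about 0.0148, matching the quadratic
print(kelly_single_bet([(0.6, 1.0), (0.4, -1.0)]))  # about 0.2, edge over odds
```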

Betting Less than Kelly

Many people will tell you to bet less than the Kelly formula says to bet. Two reasons are generally given for this. The first is that gamblers tend to overestimate their odds of winning and so will naturally overbet. Betting less than the Kelly amount corrects for this. The other is that the Kelly formula leads to extreme volatility, and you should underbet to limit the chance of being badly down for unacceptably long stretches.

It is true that gamblers often overestimate their odds. However, gamblers tend to misjudge the odds in general, and if you do that, you'll lose consistently. If you're taking your betting seriously, you owe it to yourself to become as good as possible at estimating the odds. And if you become good enough that your estimates average out to correct independently of the bet offered, then the fact that your odds are sometimes off on a particular bet will average out. (But note 'independently of the bet offered'. If your estimates of $1/3$ average out right, but are high when you're offered $1/4$ and low when you're offered $1/2$, then you've got a problem.)

Of course, that could be an impossible ideal. Certainly you won't achieve it when you start. However, without knowing how badly you're estimating, there is no way to figure out how far off you are. That said, the right way to account for that is to adjust your estimated odds towards the odds being offered. How much should you adjust them? The only way to tell is to keep track of how good a job you're doing, and then for caution's sake assume that you're estimating a little worse than that. If you do this honestly, then over time your estimates should improve.
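Here is one way to make the adjustment concrete, as a minimal sketch. It blends your own probability estimate with the one implied by the offered odds, using a trust weight you would calibrate from your own track record. Everything here is an assumption for illustration: the `weight` parameter, the linear blend, and fair offered odds with no bookmaker margin, so that the implied probability is $\frac{1}{1+b}$.

```python
def kelly_fraction(p, b):
    """Edge over odds for a two-outcome bet paying b to 1."""
    return (p * b - (1 - p)) / b


def shrunk_estimate(p_mine, b, weight):
    """Move my probability estimate toward the one implied by the offered odds.

    Assumes the offered odds are fair (no bookmaker margin), so the implied
    probability of winning is 1 / (1 + b). weight is a hypothetical trust
    level in [0, 1] for my own estimate, e.g. from tracking past accuracy.
    """
    p_offered = 1 / (1 + b)
    return weight * p_mine + (1 - weight) * p_offered


b = 1.0       # even money
p_mine = 0.6  # my estimate of the chance of winning
for weight in (1.0, 0.75, 0.5):
    p_adj = shrunk_estimate(p_mine, b, weight)
    print(weight, round(p_adj, 3), round(kelly_fraction(p_adj, b), 3))
```

In this two-outcome, fair-odds case the resulting bet scales exactly with the weight. That is because edge over odds is linear in $p$ and is $0$ at the offered odds.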

The volatility point is more subtle. Perhaps the best way to see it is to look at risk/returns. Let us return to the gambler who had $60\%$ odds of doubling his bet and $40\%$ odds of losing it. Kelly says that his edge is $0.2$ and his odds are $1$, so he should bet $0.2$ of his bankroll. Now let's look at the potential returns at different numbers of bets:

(I generated these graphs with this script. It is written in Perl and uses gnuplot to graph data. Unix, Linux and OS X come with Perl. Here is a free port for Windows users. Feel free to tweak, generate more graphs, etc.)

Looking at these please note several things. The first is that near the maximum returns at betting $0.2$ of your bankroll there is a flat area where the middle percentile doesn't change much as you change how much you bet. However the amount you stand to lose in the short run changes quite rapidly. If you wish to avoid short term volatility it is therefore worth betting something less than the theoretical maximum. How much less depends on your risk tolerance and planning horizon.
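The graphs themselves came from the Perl script mentioned above. As a rough stand-in, here is a Python sketch that tabulates the normal-approximation median and 10th percentile after 100 bets for several betting fractions. It uses the even-money bet won 60% of the time. The 100-bet horizon and the list of fractions are arbitrary choices, and `percentile_multiplier` is a hypothetical name.

```python
import math
from statistics import NormalDist


def percentile_multiplier(frac, p, n_bets, pct):
    """Normal-approximation wealth multiplier at percentile pct after n_bets,
    betting fraction frac of bankroll on an even-money bet won with prob p."""
    up, down = math.log(1 + frac), math.log(1 - frac)
    irr = p * up + (1 - p) * down
    vol = math.sqrt(p * (1 - p)) * (up - down)  # std dev of log(X) for two outcomes
    z = NormalDist().inv_cdf(pct)
    return math.exp(n_bets * irr + z * vol * math.sqrt(n_bets))


print("frac   median   10th pct   (after 100 bets)")
for frac in (0.05, 0.10, 0.15, 0.20, 0.25, 0.30):
    med = percentile_multiplier(frac, 0.6, 100, 0.5)
    low = percentile_multiplier(frac, 0.6, 100, 0.1)
    print(f"{frac:.2f}  {med:7.2f}  {low:9.3f}")
```

The median column changes relatively little around $0.2$. The 10th percentile column falls quickly as the fraction grows, which is the pattern described above.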

Therefore you should definitely bet something less than Kelly says. How much less? That depends on you. However, if you're using the one bet calculator, then you have the option to automatically optimize allocations to maximize, say, your returns after 50 bets if you fell in the 10th percentile. That calculator exists for you to play around with and develop a sense of what your comfort level is.
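Here is a minimal Python sketch of that kind of optimization. It assumes, as the calculator's note says, a normal approximation to the log of net worth. A plain grid search stands in for whatever optimizer the calculator really uses. The function names are hypothetical, and the example is the even-money bet won 60% of the time.

```python
import math
from statistics import NormalDist


def percentile_log_wealth(frac, outcomes, n_bets, pct):
    """Normal-approximation log wealth multiplier at percentile pct after n_bets.

    outcomes: (probability, net_return) pairs, e.g. (0.4, -1.0) for losing the stake.
    """
    logs = [(p, math.log(1 + r * frac)) for p, r in outcomes]
    irr = sum(p * v for p, v in logs)
    vol = math.sqrt(sum(p * (v - irr) ** 2 for p, v in logs))
    return n_bets * irr + NormalDist().inv_cdf(pct) * vol * math.sqrt(n_bets)


def best_fraction_for_percentile(outcomes, n_bets, pct, steps=2000):
    """Grid-search the fraction in [0, 1) that maximises the pct-th percentile."""
    grid = [i / steps for i in range(steps)]
    return max(grid, key=lambda f: percentile_log_wealth(f, outcomes, n_bets, pct))


bet = [(0.6, 1.0), (0.4, -1.0)]
f10 = best_fraction_for_percentile(bet, 50, 0.10)
f50 = best_fraction_for_percentile(bet, 50, 0.50)
print(f"maximise 10th percentile after 50 bets: bet {f10:.3f} of bankroll")
print(f"maximise median (long-run growth): bet {f50:.3f} of bankroll")
```

For a 10th percentile target after 50 bets, the suggested bet comes out far below the full Kelly fraction of $0.2$. Targeting the median recovers Kelly.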